Date: Sun, 2 Jun 2013 14:29:49 +0100

Hi,

That is a fair point, Scott. Out of curiosity, how long did it take to
resolve the hardware issue on the GTX 4xx and 5xx?

I reran the benchmarks with Amber recompiled and the latest drivers installed.

AmberTools version 13.06

Amber version 12.18

Details follow:

*When I run the tests on GPU-01_008:*

1) All the tests (across 2x repeats) finish apart from the following, which
fails with the errors listed:

--------------------------------------------

CELLULOSE_PRODUCTION_NVE - 408,609 atoms PME

Error: unspecified launch failure launching kernel kNLSkinTest

cudaFree GpuBuffer::Deallocate failed unspecified launch failure

2) The sdiff logs indicate that reproducibility across the two repeats is

as follows:

*GB_myoglobin:* Reproducible across 50k steps

*GB_nucleosome:* Reproducible till step 43,200

*GB_TRPCage:* Reproducible across 50k steps

*PME_JAC_production_NVE:* No reproducibility shown from step 1,000 onwards

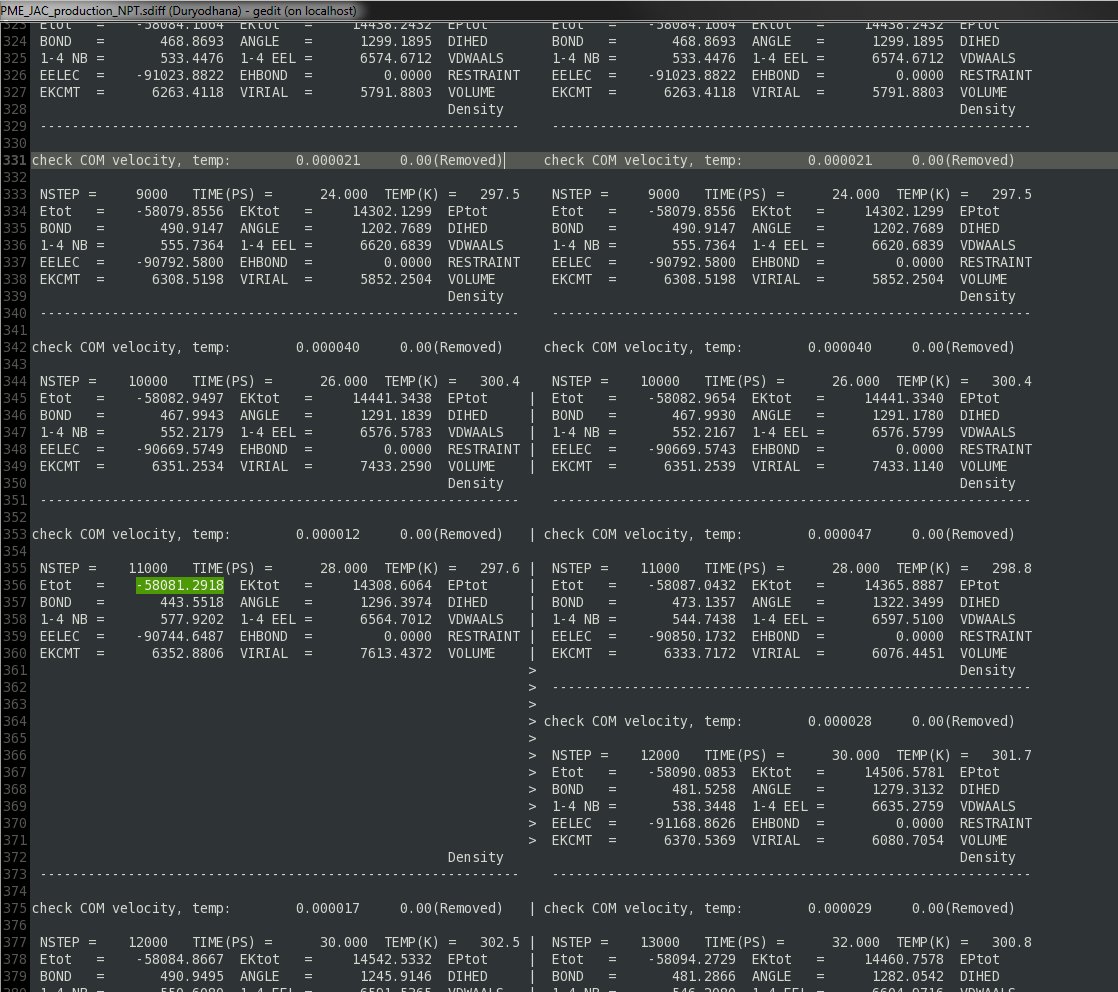

*PME_JAC_production_NPT:* Reproducible till step 1,000. Also, the outfile is
not written properly: blank gaps appear where something should have been
written.

*PME_FactorIX_production_NVE:* Reproducible across 50k steps

*PME_FactorIX_production_NPT:* Reproducible across 50k steps

*PME_Cellulose_production_NVE:* Reproducible till step 1,000. Repeat 1
crashes with an error, so the two runs do not both finish (see point 1).

*PME_Cellulose_production_NPT:* Reproducible till step 1,000.

#######################################################################################

*When I run the tests on GPU-00_TeaNCake:*

1) All the tests (across 2x repeats) finish.

2) The sdiff logs indicate that reproducibility across the two repeats is

as follows:

*GB_myoglobin:* Reproducible across 50k steps

*GB_nucleosome:* Reproducible across 50k steps

*GB_TRPCage:* Reproducible across 50k steps

*PME_JAC_production_NVE:* No reproducibility shown from step 1,000 onwards.
Also, the outfile is not written properly: blank gaps appear where something
should have been written.

*PME_JAC_production_NPT:* No reproducibility shown from step 1,000
onwards. Also, the outfile is not written properly: blank gaps appear where
something should have been written.

*PME_FactorIX_production_NVE:* Reproducible across 50k steps

*PME_FactorIX_production_NPT:* Reproducible across 50k steps

*PME_Cellulose_production_NVE:* Reproducible across 50k steps

*PME_Cellulose_production_NPT:* Reproducible across 50k steps

Out files and sdiff files are included as attachments.
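
For anyone who wants to repeat the comparison without reading through sdiff
output by eye, here is a minimal Python sketch that reports the first step at
which two runs disagree. It is only an illustration, not what I used for the
results above. It assumes the usual mdout layout, where each energy record has
an "NSTEP = ..." line followed by an "Etot = ..." line, and it compares the
printed Etot field as text, so a blank or overflowed field also counts as a
mismatch. The function names are my own.

```python
import re

# Assumed mdout layout: an "NSTEP = <int>" line, then an "Etot = <value>" line.
NSTEP_RE = re.compile(r"NSTEP\s*=\s*(\d+)")
ETOT_RE = re.compile(r"Etot\s*=\s*(\S+)")


def energies_by_step(lines):
    """Map each NSTEP to the Etot field printed in the record that follows it."""
    records = {}
    step = None
    for line in lines:
        m = NSTEP_RE.search(line)
        if m:
            step = int(m.group(1))
            continue
        m = ETOT_RE.search(line)
        if m and step is not None:
            # Keep the first record per step; the averages block at the end of
            # an mdout file repeats the final NSTEP and is ignored this way.
            records.setdefault(step, m.group(1))
            step = None
    return records


def first_divergence(lines_a, lines_b):
    """Return the first step where the two runs differ, or None if identical.

    A step present in only one run (for example after a crash) counts as a
    difference.
    """
    a = energies_by_step(lines_a)
    b = energies_by_step(lines_b)
    for step in sorted(set(a) | set(b)):
        if a.get(step) != b.get(step):
            return step
    return None
```

To use it on real files, read each mdout with something like
`open(path).read().splitlines()` and pass the two lists to
`first_divergence`.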

#################################################

*My conclusions so far:*

1) Suffice to say that all the GB benchmarks passed again, whilst the PME

benchmarks failed. The performance has improved since updating the driver

and recompiling AMBER, so patch 18 seems to have made some kind of

difference, though this is only based on 1 repeat.

2) GPU0 (aka TeaNCake) seems less likely to fail than GPU1 (aka 008), so I
am not convinced that 008 is entirely error-free, and I plan to take it out
and test it on Windows using Heaven. At the same time I will run the
repeatability test on just TeaNCake, to establish whether this error is
specific to the dual-GPU set-up.

3) Interestingly, the JAC simulation seems the most likely of the PME benchmarks to fail.

4) In all the runs that fail, the mdout file is not formatted properly.
Gaps appear around the point where reproducibility breaks down. I can't help
but think that this symptom says something significant about what is going
on, and it is definitely worth looking into. Is anyone else seeing that, or
is it specific to my setup? An example from the sdiff log is attached as a
picture, along with the PME log and sdiff files.

br,

g

On 2 June 2013 06:45, Scott Le Grand <varelse2005.gmail.com> wrote:

> Don't panic just yet, the game is just beginning here. GTX 4xx and GTX 5xx

> both had a HW bug in the texture unit we ultimately worked around. But the

> first step is uncovering issues like this in the first place...

>

>

>

> On Sat, Jun 1, 2013 at 8:29 PM, ET <sketchfoot.gmail.com> wrote:

>

> > That is very distressing. :(((

> >

> > Just looked at the results from one of the 680's installed on another

> > machine and the results are consistently reproducible across two repeats.

> > Looks like these Titans are going back to the store. Not so sure how that

> > will go.

> >

> > br,

> > g

> > _______________________________________________

> > AMBER mailing list

> > AMBER.ambermd.org

> > http://lists.ambermd.org/mailman/listinfo/amber

> >


- application/x-gzip attachment: GPU-01_008_try_03_PME_out_plus_diff_Files.tar.gz

- application/x-gzip attachment: GPU-01_TeaNCake_try_03_PME_out_plus_diff_Files.tar.gz

(image/png attachment: gaps-damn-gaps.png)