Date: Mon, 31 Oct 2016 10:37:06 +0800 (GMT+08:00)

Dear Mr/Ms

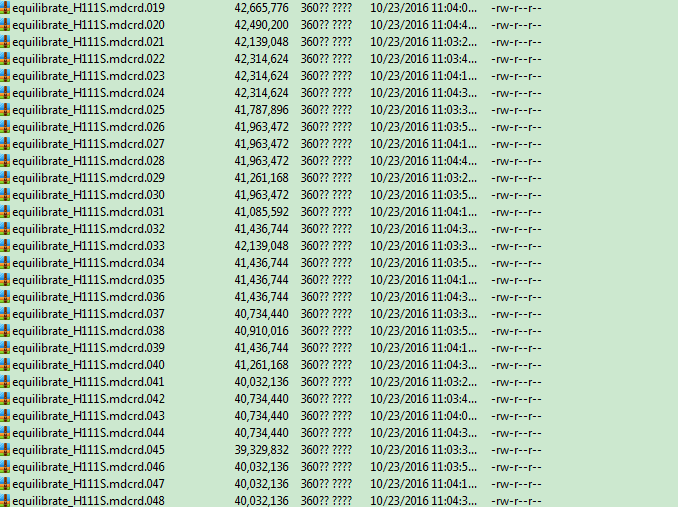

I have carried out REMD simulations and ran into a problem. When I tried to run the equilibration for every replica, some replicas completed but others did not. The job state is still "running", but no further output is produced. The REMD run is stuck as shown in the attached figure.

The jobs were running, but they were stuck for a long time. Only the first 4 replicas completed (I used 4 GPUs).

The jobs run 48 MPI processes across 4 nodes, each of which has 1 GPU card. That means I ran 12 processes on each GPU card. It looks as if the first process on each GPU card gets to the end of the run and then the rest of the processes hang.
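The 12-processes-per-GPU figure follows from dividing the rank count by the number of GPUs. As a minimal sketch of that sanity check (the function name `ranks_per_gpu` is hypothetical, and it assumes the ranks are spread evenly over the GPUs):

```shell
#!/usr/bin/env bash
# Hypothetical helper: how many MPI ranks share each GPU,
# assuming ranks are distributed evenly over all GPUs.
ranks_per_gpu() {
    local np=$1 nodes=$2 gpus_per_node=$3
    local gpus=$((nodes * gpus_per_node))
    if [ "$gpus" -le 0 ] || [ $((np % gpus)) -ne 0 ]; then
        echo "error: $np ranks do not divide evenly over $gpus GPUs" >&2
        return 1
    fi
    echo $((np / gpus))
}

# 48 ranks, 4 nodes, 1 GPU per node
ranks_per_gpu 48 4 1   # prints 12
```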

I noticed in the output files that the processes which are on the first node show the correct hostname and the processes on the other nodes show the hostname as "Unknown":

$ grep Hostname: equilibrate_WT.mdout.00{1..4}
equilibrate_WT.mdout.001:| Hostname: gpu1705
equilibrate_WT.mdout.002:| Hostname: Unknown
equilibrate_WT.mdout.003:| Hostname: Unknown
equilibrate_WT.mdout.004:| Hostname: Unknown
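To count how many output files report an unknown hostname across all replicas, something like the following could be used. This is only a sketch: the function name `count_unknown_hosts` is hypothetical, and it assumes every mdout file contains a `Hostname:` line in the format shown in the grep output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the files whose "Hostname:" line reads "Unknown".
# Assumes the mdout format shown in the grep output ("| Hostname: <name>").
count_unknown_hosts() {
    local n=0 f
    for f in "$@"; do
        if grep -q 'Hostname: *Unknown' "$f"; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}
```

For example, `count_unknown_hosts equilibrate_WT.mdout.*` would print the number of replicas affected.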

I also noticed that the output files show "Peer to Peer support" is enabled:

|---------------- GPU PEER TO PEER INFO -----------------
|
| Peer to Peer support: ENABLED

I disabled peer-to-peer support and performed the following two tests:

1. Run 12 processes on a single GPU with something like:

#PBS -l select=1:ncpus=24:ngpus=1:mpiprocs=12
mpirun -np 12 pmemd.cuda.MPI -ng 12 -groupfile remd.groupfile

Result: the same problem as before.

2. Run 1 process on each of 4 GPUs with something like:

#PBS -l select=4:ncpus=24:ngpus=1:mpiprocs=1
mpirun -np 4 pmemd.cuda.MPI -ng 4 -groupfile remd.groupfile

Result: all the trajectories completed.
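To tell quickly which replicas finished in tests like these, the output files can be scanned for the end-of-run timing summary. The sketch below is not a definitive check: the function name `list_unfinished` is hypothetical, and it assumes that a completed pmemd run writes a "Total wall time" line at the end of its mdout file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the mdout files that lack a final timing summary.
# ASSUMPTION: a completed run ends its mdout with a "Total wall time" line.
list_unfinished() {
    local f
    for f in "$@"; do
        if ! grep -q 'Total wall time' "$f"; then
            echo "$f"
        fi
    done
}
```

Running `list_unfinished equilibrate_WT.mdout.*` would then print only the replicas that hung or were still running.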

I did some debugging using my 12-processes-on-1-node inputs. Attaching a debugger to the first process of a hung job shows that it has finished and is waiting in MPI_Finalize:

#10 0x00002aaab5754c6a in PMPI_Finalize () at ../../src/mpi/init/finalize.c:260
#11 0x00002aaab5255c9a in pmpi_finalize_ (ierr=0x7fffffff9f58) at ../../src/binding/fortran/mpif_h/finalizef.c:267
#12 0x00000000005120a5 in pmemd_lib_mod_mp_mexit_ ()
#13 0x000000000052f8a8 in MAIN__ ()
#14 0x0000000000407f4e in main ()

Attaching the debugger to one of the other processes shows it waiting in a CUDA synchronization function:

#6 0x00002aaab4f81e29 in cudaThreadSynchronize () from /app/cuda/7.5/lib64/libcudart.so.7.5
#7 0x000000000064236b in kNLSkinTest ()
#8 0x00000000004d5f5c in runmd_mod_mp_runmd_ ()
#9 0x000000000052f129 in MAIN__ ()
#10 0x0000000000407f4e in main ()
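Collecting a backtrace from every rank of a hung job, rather than attaching to processes one at a time, can be scripted. The sketch below only prints the gdb commands so they can be reviewed before being run. The function name `bt_commands` is hypothetical, and it assumes gdb is installed on the node and that the user is allowed to attach to the processes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (do not run) one gdb command per PID.
# Each command attaches to the process, dumps a backtrace, and detaches.
bt_commands() {
    local pid
    for pid in "$@"; do
        echo "gdb -p $pid -batch -ex bt"
    done
}
```

On a compute node this might be used as `bt_commands $(pgrep -x pmemd.cuda.MPI)`, with the printed lines then executed by hand or piped to `sh`.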

We have the NVIDIA Multi-Process Service (MPS) running on our GPU nodes (http://docs.nvidia.com/deploy/mps/index.html), which enables multiple MPI processes to access the GPU simultaneously.

If I temporarily turn off MPS, the calculation completes successfully. However, disabling MPS has a significant performance impact, so it is not something we can turn off permanently. We therefore need to explore alternative solutions.

My PBS script is:

#PBS -N amber_A117V
# ask for 2 chunks of 24 cores and 1 GPU, each running 12 MPI processes with 2 threads each: 2x((12 MPI x 2 OMP) + 1 GPU)
#PBS -l select=4:ncpus=24:mem=96gb
#PBS -q gpu
# modify for required runtime
#PBS -l walltime=120:00:00
# Send standard output and standard error to same output file
#PBS -j oe

# Change directory to directory where job was submitted
cd "$PBS_O_WORKDIR"

# run the executable
mpirun -np 48 pmemd.cuda.MPI -ng 48 -groupfile equilibrate.groupfile
mpirun -np 48 pmemd.cuda.MPI -ng 48 -groupfile remd.groupfile

Thank you

Best regards

Lulu Ning

_______________________________________________

AMBER mailing list

AMBER.ambermd.org

http://lists.ambermd.org/mailman/listinfo/amber

(image/png attachment: Unnamed_QQ_Screenshot20161031103712.png)